In the last post, I covered Datastore Clusters, Storage DRS, and Pure Storage FlashArray from the perspective of performance and capacity thresholds.

That post covered some reasons why a vSphere administrator might want to use Datastore Clusters, and possibly Storage DRS, as well as how Storage DRS thresholds and automation behave when using one or more storage arrays.

There isn’t a lot of information out there on SPBM and VMware Storage DRS, so I decided to dig in, tinker around in the lab, and post my findings here.

Storage Policy Based Management & Storage DRS

In my experience, the vast majority of Datastore Clusters I have seen in use contain Datastores presented from the same array and of the same type/tier of storage. Administrators typically do not want to Storage vMotion workloads from one array to another unless absolutely necessary. But what if a Datastore Cluster could include Datastores from multiple arrays, with additional control over the placement and migration of workloads?

Enter Storage Policy Based Management (SPBM), Storage DRS, and datastore tags.

For traditional vSphere Datastores (VMFS/NFS), tags can be used to categorize Datastores, and tag-based SPBM policies can reference those tags. Storage DRS can then enforce those policies, either discouraging (soft) or preventing (hard) virtual machines and their disks from moving to Datastores that do not match the SPBM policy assigned to the virtual machine or disk.

Kyle Grossmiller has a video that covers Datastore tagging and tiering here: https://www.youtube.com/watch?v=K3XOw3Io5hs

Kyle does a good job demonstrating this behavior, but I wanted to dig deeper into the requirements and how it all works.

SPBM & SDRS Requirements

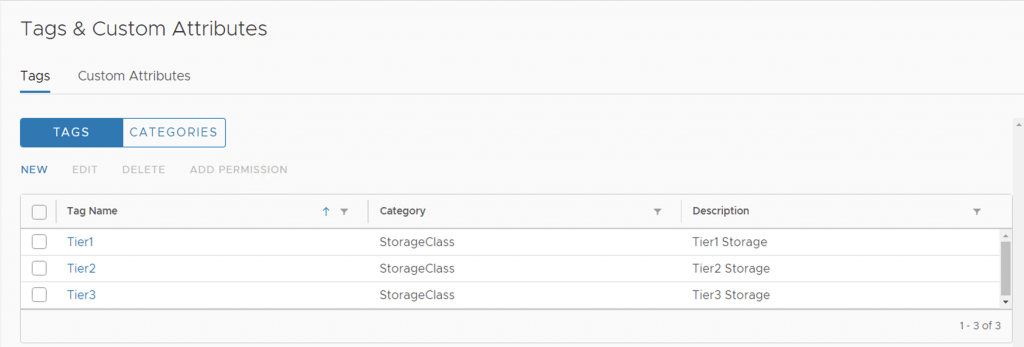

For Storage DRS to use SPBM Policies, one or more categories with tags must be created, Datastores must be assigned a tag or tags, and tag-based storage policies must be created so they can be assigned to virtual machines.
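To make the dependency between these pieces a bit more concrete, here is a minimal Python sketch that models them in memory and reports which prerequisite is still missing. Everything in it (the `Inventory` class, `missing_requirements`, and the datastore names) is hypothetical and not part of any VMware API; in a real environment these objects live in vCenter.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical in-memory stand-in for what vCenter stores; not a VMware API.
TagRef = Tuple[str, str]  # (category, tag), e.g. ("StorageClass", "Tier1")


@dataclass
class Inventory:
    # 1st requirement: categories and the tags they contain
    categories: Dict[str, Set[str]] = field(default_factory=dict)
    # 1st requirement (cont.): which tags are assigned to which datastore
    datastore_tags: Dict[str, Set[TagRef]] = field(default_factory=dict)
    # 2nd requirement: tag-based storage policies, each requiring one tag
    policies: Dict[str, TagRef] = field(default_factory=dict)


def missing_requirements(inv: Inventory) -> List[str]:
    """Return the prerequisites that are not met yet (empty list = ready)."""
    problems = []
    if not any(inv.categories.values()):
        problems.append("no category with at least one tag exists")
    if not any(inv.datastore_tags.values()):
        problems.append("no datastore has a tag assigned")
    if not inv.policies:
        problems.append("no tag-based storage policy exists")
    # A policy that points at a tag which does not exist can never match.
    for name, (category, tag) in sorted(inv.policies.items()):
        if tag not in inv.categories.get(category, set()):
            problems.append(f"policy {name} references unknown tag {category}/{tag}")
    return problems


inv = Inventory(
    categories={"StorageClass": {"Tier1", "Tier2", "Tier3"}},
    datastore_tags={"fa-x-ds01": {("StorageClass", "Tier1")}},
    policies={"Tier1": ("StorageClass", "Tier1")},
)
print(missing_requirements(inv))  # []
```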

Tiering – 1st Requirement, Tag/Category Entries & Datastore Assignment

Tags (managed under Tags & Custom Attributes in the vSphere Client) are the core component that makes this pseudo-tiering work. Tags can designate different storage types according to just about any custom classification (performance, use case, etc.). Storage Policies can reference Tags to help dictate the placement of VMs that have those policies assigned, so the Tags must be created first.

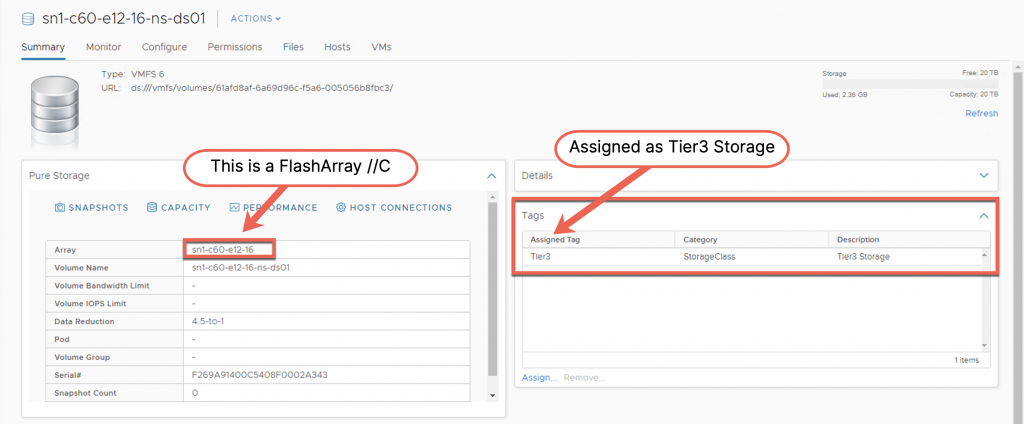

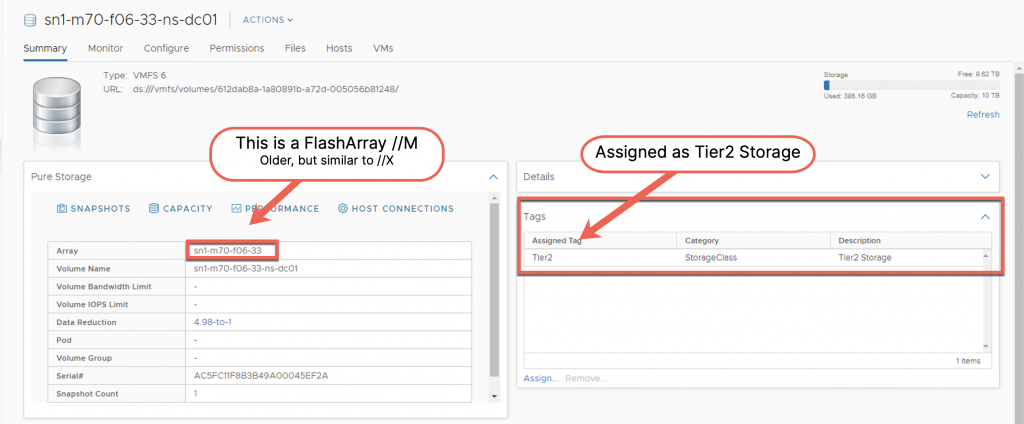

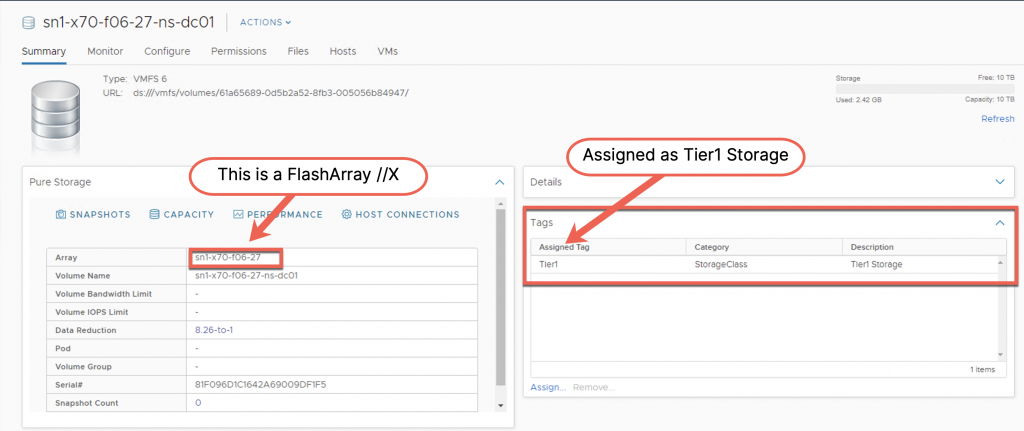

Above, three Tags have been created (Tier1, Tier2, and Tier3) and assigned to the same Category, called StorageClass. In this “tiering” example, each Tag is assigned to Datastores based on the type of array backing them (Tier1 – FlashArray //X, Tier2 – FlashArray //M, and Tier3 – FlashArray //C).

The purpose of tagging these Datastores is that, when combined with a Storage Policy Based Management policy assignment, the Tags dictate where VMs are placed, whether during provisioning or during a Storage vMotion.

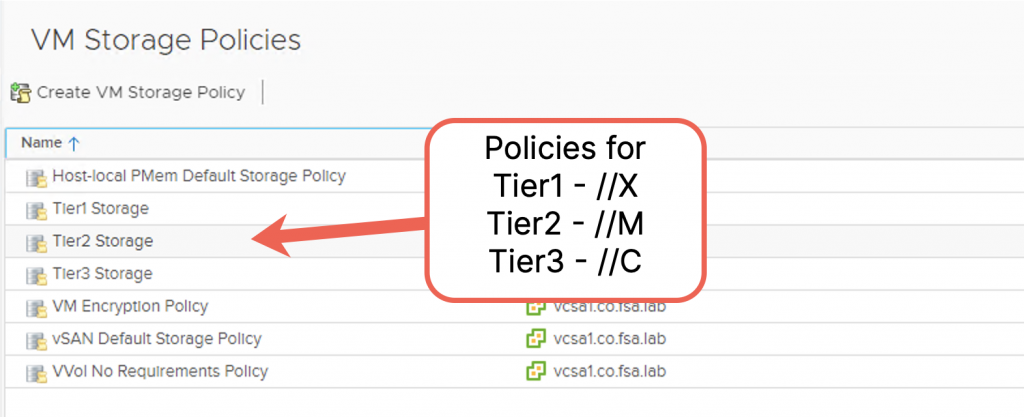

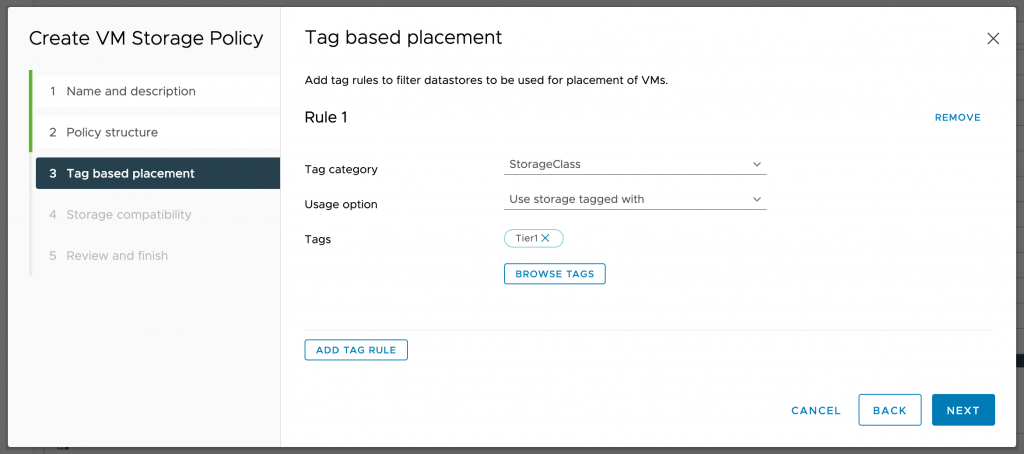

Tiering – 2nd Requirement, Tag-Based SPBM Policies

If a virtual machine or its disks need to reside on a specific Datastore or set of Datastores in a Datastore Cluster, create a Tag-based SPBM Policy that aligns with the corresponding Tag.

Create one Tag-based Storage Policy for each Tag being used. In this example, three policies are created, one for each tier of storage presented by the FlashArrays.
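The matching rule behind a Tag-based policy can be sketched as a simple filter. This is a hypothetical model, assuming one tag rule per policy; the datastore names (fa-x-ds01 and so on) and the `POLICIES`/`DATASTORE_TAGS` dictionaries are made up for illustration and mirror the StorageClass example above.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical datastore -> tag assignments (Tier1 = //X, Tier2 = //M, Tier3 = //C)
DATASTORE_TAGS: Dict[str, Set[Tuple[str, str]]] = {
    "fa-x-ds01": {("StorageClass", "Tier1")},
    "fa-x-ds02": {("StorageClass", "Tier1")},
    "fa-m-ds01": {("StorageClass", "Tier2")},
    "fa-c-ds01": {("StorageClass", "Tier3")},
}

# One tag-based policy per tag; each rule is "datastore must carry this tag".
POLICIES: Dict[str, Tuple[str, str]] = {
    f"Tier{n}": ("StorageClass", f"Tier{n}") for n in (1, 2, 3)
}


def compatible_datastores(policy: str) -> List[str]:
    """Datastores whose tags satisfy the policy's single tag rule (sorted)."""
    required = POLICIES[policy]
    return sorted(ds for ds, tags in DATASTORE_TAGS.items() if required in tags)


print(compatible_datastores("Tier1"))  # ['fa-x-ds01', 'fa-x-ds02']
```

Only the Datastores returned by the filter would be presented as compatible for a VM assigned that policy.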

Tiering – 3rd Requirement, VM Datastore Cluster & Storage DRS (SDRS) Deployment

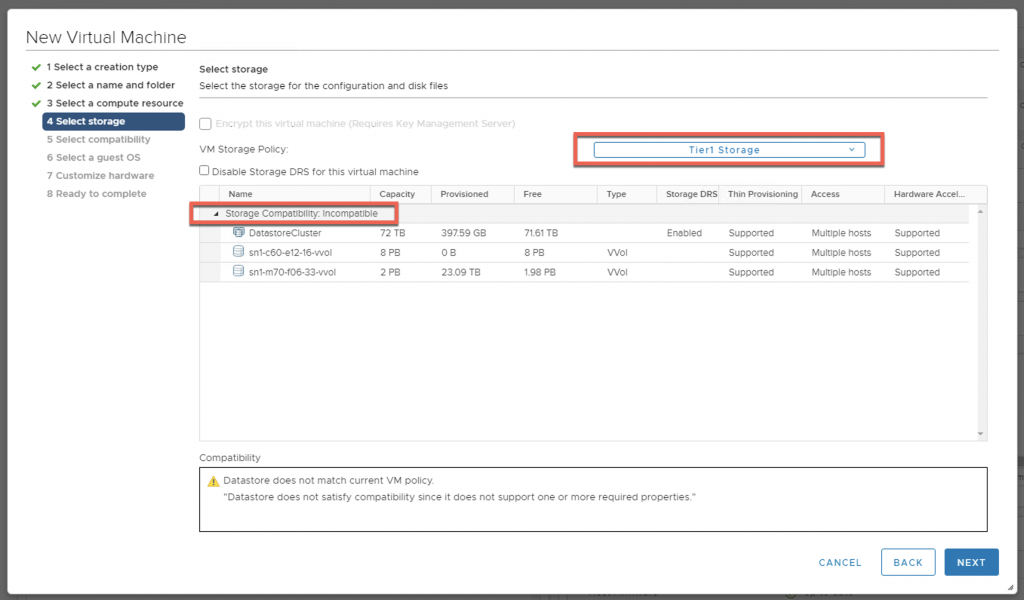

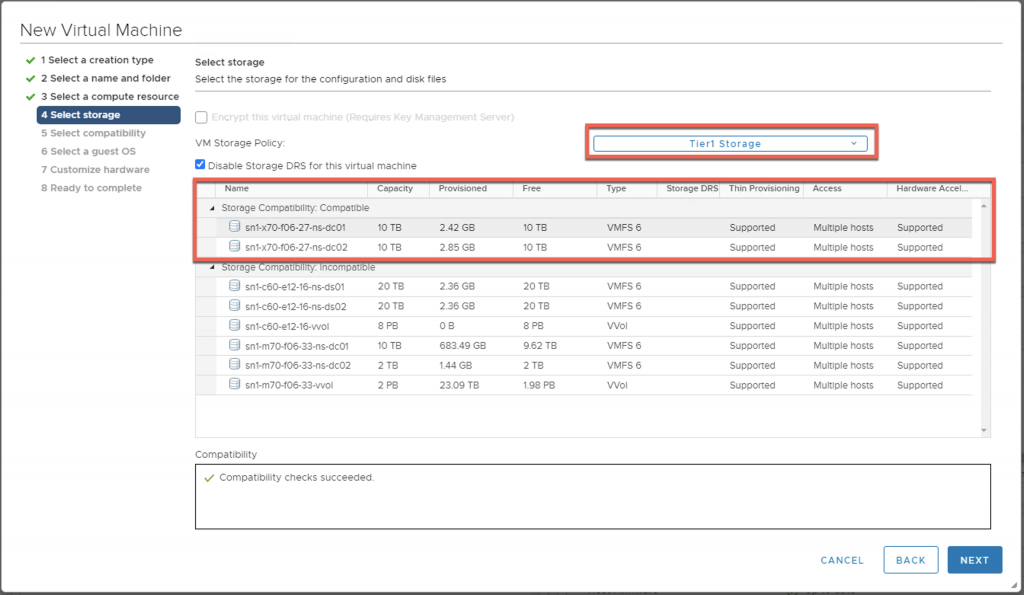

During provisioning, vSphere does its best to place VMs on Datastores that are compliant with their assigned Storage Policy. When deploying a VM and choosing a Tag-based Storage Policy (Tier1 here), only Datastores that meet that policy’s criteria can be used, so the VM is placed on an appropriately tagged Datastore.

*Note: For Datastore Clusters that have multiple Tag assignments, no storage will be shown as compatible. To work around this, temporarily disable Storage DRS for this virtual machine.

*Note: Storage DRS must be re-enabled for this VM later. Until it is, the VM will not move “automatically” when conditions later require it to move.
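One way to picture why a mixed-tag Datastore Cluster shows no compatible storage is the simplified model below. It assumes (this is not taken from VMware documentation) that the cluster is only offered as compatible when every member Datastore satisfies the policy, while disabling Storage DRS for the VM lets individual member Datastores be chosen. That assumption reproduces the behavior described in the note; the function and datastore names are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical mixed-tier Datastore Cluster: member datastore -> tags
CLUSTER: Dict[str, Set[str]] = {
    "fa-x-ds01": {"Tier1"},
    "fa-m-ds01": {"Tier2"},
    "fa-c-ds01": {"Tier3"},
}


def cluster_is_compatible(members: Dict[str, Set[str]], required_tag: str) -> bool:
    # Assumption: the cluster as a whole matches only if every member matches.
    return all(required_tag in tags for tags in members.values())


def placement_choices(members: Dict[str, Set[str]], required_tag: str,
                      sdrs_enabled_for_vm: bool) -> List[str]:
    """What the deploy wizard would offer under this simplified model."""
    if sdrs_enabled_for_vm:
        # With SDRS on, placement targets the Datastore Cluster as a unit.
        if cluster_is_compatible(members, required_tag):
            return ["<datastore cluster>"]
        return []
    # With SDRS disabled for the VM, individual members can be picked.
    return sorted(ds for ds, tags in members.items() if required_tag in tags)


print(placement_choices(CLUSTER, "Tier1", sdrs_enabled_for_vm=True))   # []
print(placement_choices(CLUSTER, "Tier1", sdrs_enabled_for_vm=False))  # ['fa-x-ds01']
```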

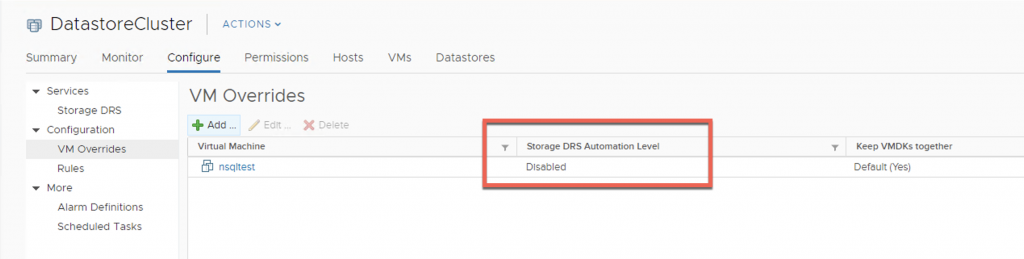

To remove the VM override, go to the VM Overrides section of the Storage DRS configuration.

Select the VM, and choose Edit to remove the override.

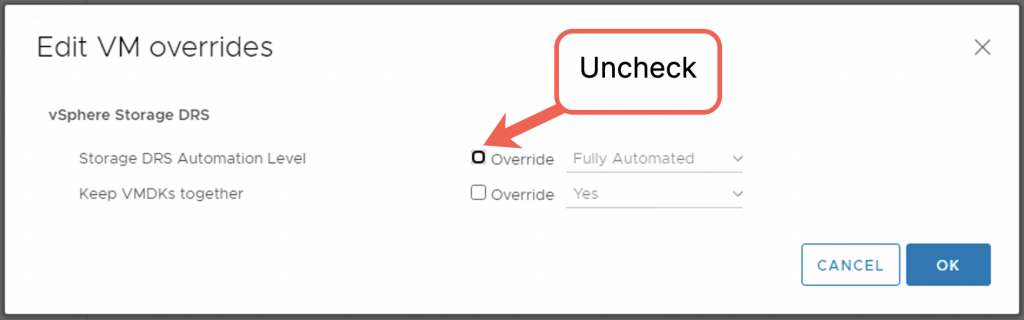

By unchecking Override, the VM can move automatically when a Storage DRS operation occurs.

Tiering – 4th Requirement, Storage Profile Enforcement

A quick summary of where we are:

- Tags have been created for 3 different storage types

- Storage Policy Based Management Policies have been created to align with each of those Tags denoting different storage types

- An individual VM or multiple VMs have been:

  - Assigned a Storage Policy for a given tier of storage

  - Deployed to an appropriately tagged Datastore in the Datastore Cluster that meets the Policy criteria

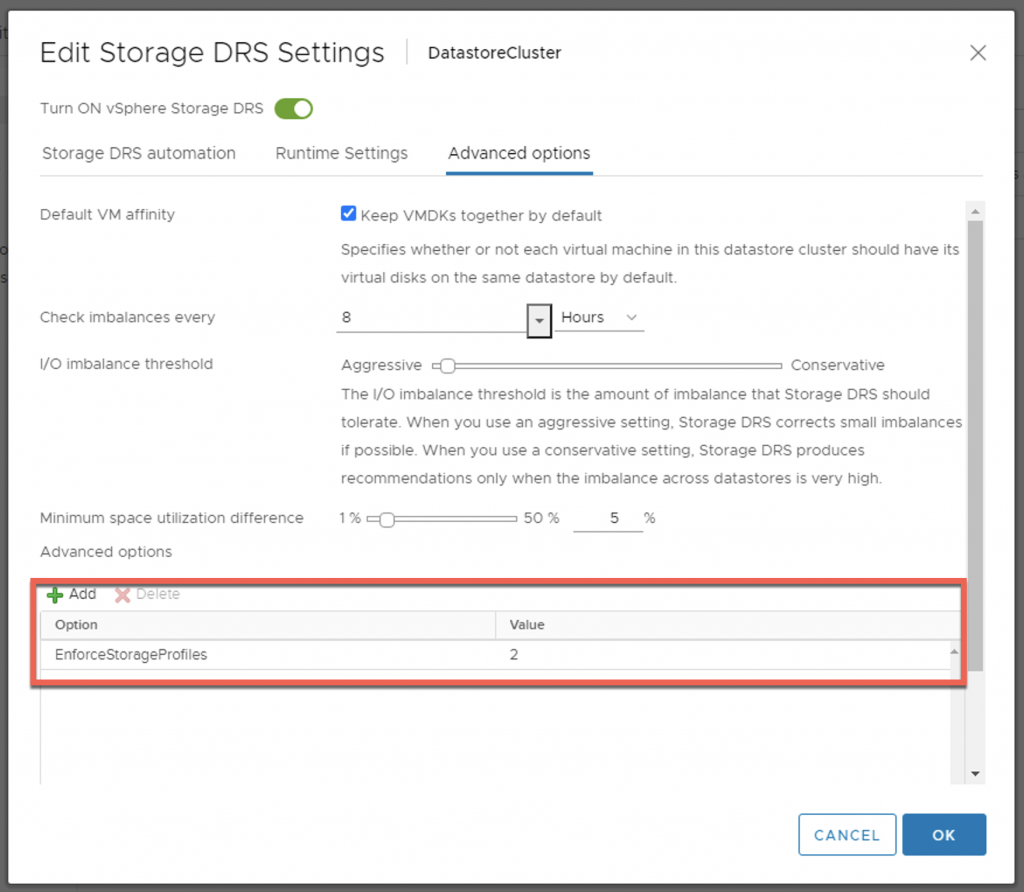

The Storage DRS configuration for the Datastore Cluster must have an advanced setting applied that restricts VM movement to Datastores in the Datastore Cluster whose Tag assignment matches the VM’s Policy.

This advanced setting is EnforceStorageProfiles = 2.

The VMware Storage DRS FAQ KB provides more detail on this setting (https://kb.vmware.com/kb/2149938).

EnforceStorageProfiles

To configure Storage DRS interoperability with SPBM, set EnforceStorageProfiles to one of the following values:

- 0 – disabled (default)

- 1 – soft enforcement

- 2 – hard enforcement

Option 2 (hard enforcement) will not allow a VM to move to a Datastore that doesn’t satisfy its Storage Policy assignment. Option 1 (soft enforcement) makes a best effort to honor the policy but does not guarantee it.
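The difference between the three EnforceStorageProfiles values can be illustrated with a simplified, hypothetical model of target selection. This is not VMware’s placement algorithm; it only shows how each value narrows (or doesn’t narrow) the set of Datastores a VM may move to. The `candidate_targets` function and the datastore names are made up for illustration.

```python
from typing import Dict, List, Set


def candidate_targets(datastores: Dict[str, Set[str]], required_tag: str,
                      enforce: int) -> List[str]:
    """Datastores a VM may move to under a given EnforceStorageProfiles value."""
    compliant = sorted(ds for ds, tags in datastores.items() if required_tag in tags)
    everything = sorted(datastores)
    if enforce == 0:  # disabled: the policy is ignored
        return everything
    if enforce == 1:  # soft: prefer compliant targets, fall back if none exist
        return compliant or everything
    if enforce == 2:  # hard: never move to a non-compliant datastore
        return compliant
    raise ValueError("EnforceStorageProfiles must be 0, 1 or 2")


ds = {"fa-x-ds02": {"Tier1"}, "fa-m-ds01": {"Tier2"}}
print(candidate_targets(ds, "Tier3", enforce=1))  # ['fa-m-ds01', 'fa-x-ds02']
print(candidate_targets(ds, "Tier3", enforce=2))  # []
```

The empty list under hard enforcement is the important case: with no compliant Datastore available, the VM simply has nowhere to go.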

Tiering – 5th Requirement, Conditions invoking movement/tiering

For Storage DRS VM movement to occur, one of a few conditions must be satisfied:

- A datastore crosses the performance (I/O latency) threshold – FlashArray seldom reaches this threshold, and I/O-based balancing is typically disabled

- A datastore crosses the capacity (space utilization) threshold – This is entirely possible depending on datastore sizes, vmdk type (thin/LZT/EZT), and the sheer number/size of VMs provisioned

- A datastore is placed in maintenance mode – This process evacuates the datastore, and VMs move to other datastores according to the EnforceStorageProfiles configuration
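As a rough illustration, the three triggers above can be expressed as a single check. This is a hypothetical sketch: `DatastoreState` and `movement_triggers` are made-up names, and the default thresholds (80% space, 15 ms latency) are assumed to match the vSphere Client defaults, so verify them against your own Datastore Cluster settings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DatastoreState:
    name: str
    capacity_gb: float
    used_gb: float
    latency_ms: float
    in_maintenance_mode: bool = False


def movement_triggers(ds: DatastoreState, space_threshold_pct: float = 80.0,
                      latency_threshold_ms: float = 15.0,
                      io_balancing_enabled: bool = False) -> List[str]:
    """Return which of the three conditions would prompt Storage DRS to act."""
    reasons = []
    if ds.in_maintenance_mode:
        reasons.append("maintenance mode")
    if 100.0 * ds.used_gb / ds.capacity_gb > space_threshold_pct:
        reasons.append("space threshold")
    # Latency only counts when I/O metric balancing is enabled, which is
    # often left disabled with FlashArray.
    if io_balancing_enabled and ds.latency_ms > latency_threshold_ms:
        reasons.append("latency threshold")
    return reasons


print(movement_triggers(DatastoreState("fa-x-ds01", 1000, 850, 0.5)))  # ['space threshold']
```

Note that none of these conditions looks at policy compliance, which is exactly the gap described next.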

Storage DRS does not automatically move a VM with a policy assignment when it resides on a datastore that does not meet that policy’s criteria. VMware did mention at one time they might be adding this capability, but it has not been delivered at this time.

Additional work, using something like the VMware Event Broker Appliance, could check VMs periodically and Storage vMotion them appropriately, but I’m not aware of any work done to perform that at this time.
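The decision logic such a periodic job would need is fairly small. The sketch below is hypothetical and calls no VMware or Event Broker Appliance API: `plan_moves` only finds VMs sitting on a datastore that does not carry their policy’s tag and suggests the compliant datastore with the most free space. Actually performing the Storage vMotion is left to whatever tooling runs it.

```python
from typing import Dict, Optional, Tuple


def plan_moves(vms: Dict[str, Tuple[str, str]],
               datastore_tag: Dict[str, str],
               free_gb: Dict[str, float],
               vm_size_gb: Dict[str, float]) -> Dict[str, Optional[str]]:
    """vms maps VM name -> (current datastore, required tag).

    Returns VM name -> suggested target datastore, or None when no compliant
    datastore has room. Compliant VMs are left out of the plan."""
    free = dict(free_gb)  # working copy so planned moves reserve space
    plan: Dict[str, Optional[str]] = {}
    for vm, (current, tag) in sorted(vms.items()):
        if datastore_tag.get(current) == tag:
            continue  # already on a datastore matching its policy
        size = vm_size_gb[vm]
        options = [ds for ds, t in datastore_tag.items()
                   if t == tag and free.get(ds, 0.0) >= size]
        target = max(options, key=lambda ds: free.get(ds, 0.0), default=None)
        if target is not None:
            free[target] = free.get(target, 0.0) - size
        plan[vm] = target
    return plan
```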

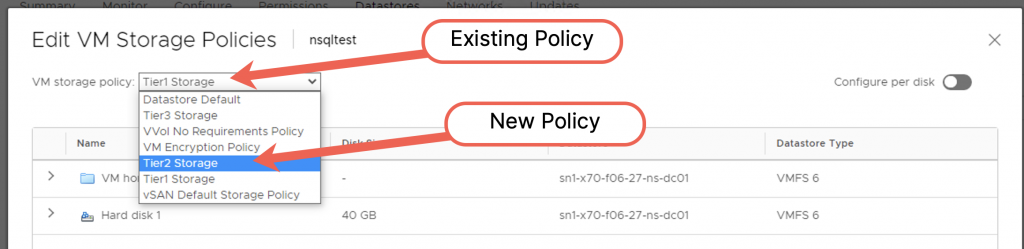

To move a VM to a different tier of storage, a few things need to occur.

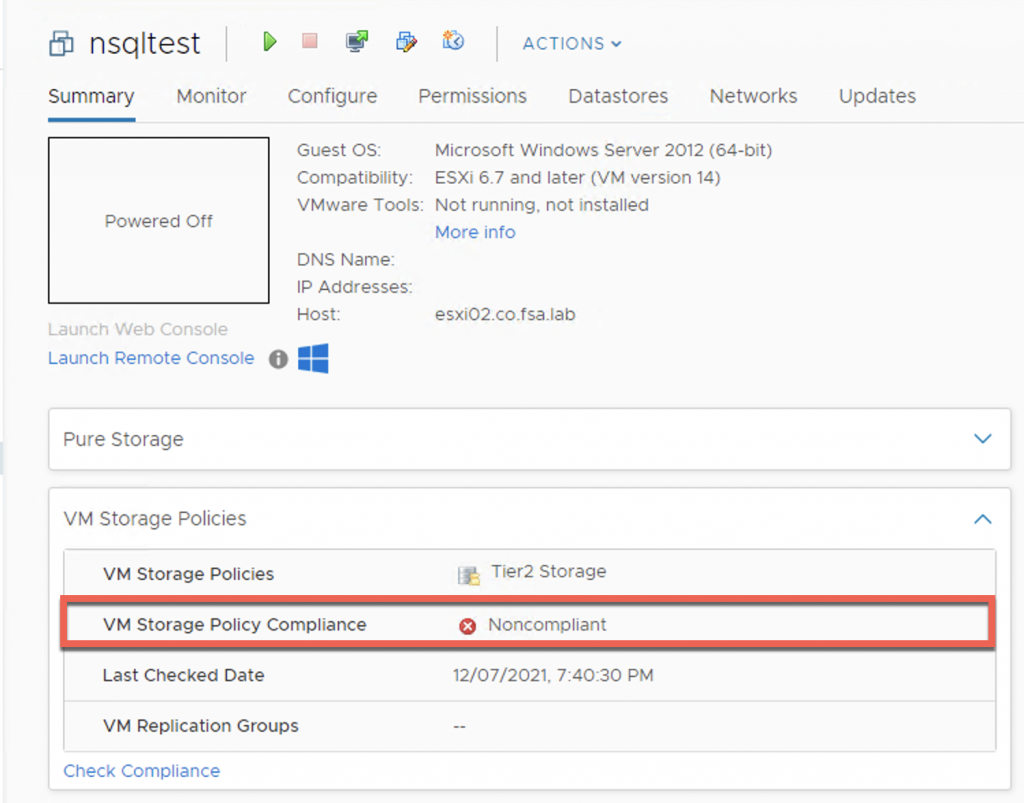

1 – Assign a new Storage Policy to a VM and its hard disks

The VM will then show as non-compliant as the policy does not match the datastore it resides on.

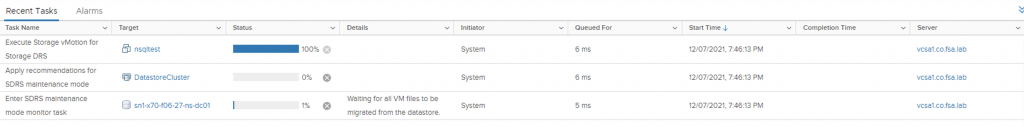

2 – Put the datastore it is on in Maintenance Mode (which will attempt to move everything on it), or wait until the datastore fills up past the capacity threshold.

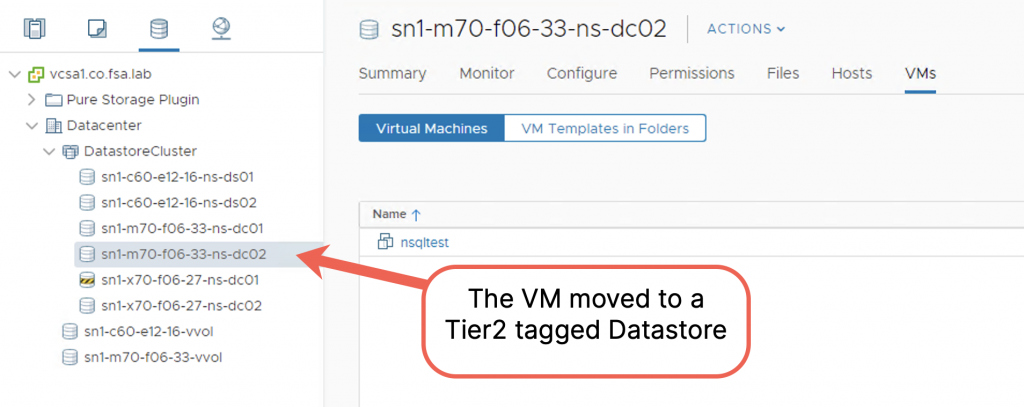

When a datastore is put in Maintenance Mode, or exceeds the capacity threshold, VMs automatically move to datastores that match each VM’s Storage Policy assignment.

With EnforceStorageProfiles = 2, either event moves the VM only to an appropriately tagged datastore (provided there is capacity).
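The two-step flow (reassign the policy, then evacuate the datastore) can be simulated to show why a re-tiered VM ends up where it does. This is a hypothetical sketch of hard enforcement only: `evacuate` and all names are made up, and "most free space" is a simple stand-in for Storage DRS’s real target choice. VMs with no compliant target that has room are reported as stuck.

```python
from typing import Dict, List, Tuple


def evacuate(source: str,
             placements: Dict[str, str],
             vm_tag: Dict[str, str],
             vm_size_gb: Dict[str, float],
             datastore_tag: Dict[str, str],
             free_gb: Dict[str, float]) -> Tuple[Dict[str, str], List[str]]:
    """Move every VM off `source`, honoring hard policy enforcement.

    Returns the new VM -> datastore placements and the VMs that had no
    compliant target with enough free space (they stay on `source`)."""
    free = dict(free_gb)  # working copy so each move reserves space
    new_placements = dict(placements)
    stuck: List[str] = []
    for vm in sorted(v for v, ds in placements.items() if ds == source):
        size = vm_size_gb[vm]
        # Hard enforcement: only other datastores carrying the VM's policy tag
        targets = [ds for ds, tag in datastore_tag.items()
                   if ds != source and tag == vm_tag[vm]
                   and free.get(ds, 0.0) >= size]
        if not targets:
            stuck.append(vm)
            continue
        target = max(targets, key=lambda ds: free.get(ds, 0.0))
        free[target] = free.get(target, 0.0) - size
        new_placements[vm] = target
    return new_placements, stuck


# app01 was re-assigned the Tier2 policy (step 1) while still on a Tier1 datastore.
placements = {"app01": "fa-x-ds01", "db01": "fa-x-ds01", "web01": "fa-m-ds01"}
vm_tag = {"app01": "Tier2", "db01": "Tier1", "web01": "Tier2"}
sizes = {"app01": 100, "db01": 200, "web01": 50}
ds_tag = {"fa-x-ds01": "Tier1", "fa-x-ds02": "Tier1", "fa-m-ds01": "Tier2"}
free = {"fa-x-ds01": 0, "fa-x-ds02": 500, "fa-m-ds01": 400}
print(evacuate("fa-x-ds01", placements, vm_tag, sizes, ds_tag, free))
```

In this example app01 lands on the Tier2 datastore and db01 stays within Tier1, which is the “tiering” effect of combining a policy change with Maintenance Mode.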

Summary

Storage Profiles, Tagged Datastores, Datastore Clusters, and Storage DRS together provide a mechanism to tier VMs across different types of storage with relative ease. While it would be nice for VMware to complete this feature by checking policy compliance and moving VMs accordingly, typical capacity growth or Datastore Maintenance Mode can still provide a level of automated tiering across storage types.

*Note: Keep in mind that Storage DRS migrations from one Datastore to another on the same FlashArray complete very quickly because the copy is offloaded using the vStorage APIs for Array Integration (VAAI). Storage DRS operations that move a VM to a Datastore backed by a different array cannot take advantage of VAAI offloads and will take longer to complete.
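A tiny, hypothetical helper makes the distinction explicit: whether a proposed move stays on one array (eligible for the VAAI XCOPY/Full Copy offload) or crosses arrays (a host-based copy). The `DATASTORE_ARRAY` mapping and array names are illustrative assumptions, and the offload also assumes VAAI is enabled on the hosts.

```python
# Hypothetical mapping of datastore -> backing array (illustrative names only)
DATASTORE_ARRAY = {
    "fa-x-ds01": "flasharray-x-01",
    "fa-x-ds02": "flasharray-x-01",
    "fa-m-ds01": "flasharray-m-01",
}


def copy_path(source: str, target: str) -> str:
    """Classify a proposed Storage DRS move by how the data will be copied."""
    if DATASTORE_ARRAY[source] == DATASTORE_ARRAY[target]:
        # Same array: the XCOPY (Full Copy) primitive can offload the copy
        return "VAAI offload"
    # Different arrays: XCOPY cannot span arrays, so the host copies the data
    return "host-based copy"


print(copy_path("fa-x-ds01", "fa-m-ds01"))  # host-based copy
```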

Reference Links:

- VMware Storage DRS FAQ KB: https://kb.vmware.com/s/article/2149938

- VMware Storage DRS integration with Storage Profiles KB: https://kb.vmware.com/s/article/2142765

- VMware Storage DRS integration with Storage Profiles: https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.resmgmt.doc/GUID-9080835B-1F3C-46E8-9586-066992083A35.html (Selectable for vSphere 7.0/6.7/6.5)

*Note: Tags may be used on any VMFS/NFS storage supported by vSphere; they are not specific to Pure Storage FlashArray.