A question was asked the other day about the performance behavior of a virtual machine after a vMotion on a 2 Node vSAN configuration. The question basically was:

“Should there be a degradation in read performance after a virtual machine is vMotioned to the alternate host in a Hybrid 2 Node vSAN configuration?”

During normal operations, the user saw a high number of read IOPS. After the virtual machine was moved to the alternate host, read IOPS dropped significantly, and after some time, performance returned to normal. When they vMotioned the virtual machine back to the original host, they saw the same degradation in read performance, and again, performance returned to normal after some time.

This is, in fact, the expected out-of-the-box behavior for 2 Node vSAN configurations. To understand why, it is important to know how write and read operations behave in 2 Node vSAN configurations. This post covers vSAN 6.1 and 6.2. Update: it also applies to vSAN 6.5, 6.6, and 6.7.

Writes Are Synchronous

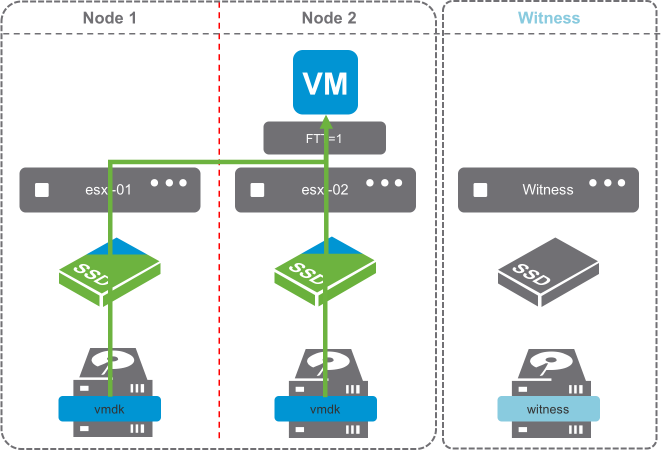

In vSAN, write operations are always synchronous. The image of a Hybrid vSAN cluster above shows writes being written to Node 1 and Node 2, with metadata updates being written to the Witness appliance. This is due to a Number of Failures to Tolerate (FTT) policy of 1, which places one mirror copy of the data on each node and a witness component on the Witness appliance.

Notice the blue triangle in the cache devices? That is the 30% of the cache device allocated as the write buffer. The remaining 70%, shown in green, is the read cache. Note that All-Flash vSAN clusters do not use the cache devices for read caching.
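As a quick worked example of the hybrid split, the Python sketch below computes how one cache device divides into read cache and write buffer. It is illustrative arithmetic only: the function name is hypothetical, All-Flash is modeled as a device used entirely as write buffer (since it does not use the cache device for read caching), and any version-specific limits are ignored.

```python
# Illustrative arithmetic only: split one cache device into read cache and
# write buffer using the hybrid 70/30 ratio described above.
# All-Flash is modeled as 100% write buffer (no read caching on the cache tier).
# Version-specific limits are ignored in this sketch.

HYBRID_READ_FRACTION = 0.70
HYBRID_WRITE_FRACTION = 0.30


def cache_split(cache_device_gb: float, all_flash: bool = False) -> dict:
    """Return the read cache and write buffer sizes, in GB, for one cache device."""
    if cache_device_gb <= 0:
        raise ValueError("cache device size must be positive")
    if all_flash:
        return {"read_cache_gb": 0.0, "write_buffer_gb": float(cache_device_gb)}
    return {
        "read_cache_gb": cache_device_gb * HYBRID_READ_FRACTION,
        "write_buffer_gb": cache_device_gb * HYBRID_WRITE_FRACTION,
    }
```

For example, `cache_split(400)` yields roughly 280 GB of read cache and 120 GB of write buffer for a hybrid 400 GB cache device.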

Writes go to the write buffer on both Nodes 1 and 2. This is always the case because writes occur on both nodes simultaneously.
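The synchronous behavior can be sketched as a toy Python model. This is not vSAN code: the `Node` class and `mirrored_write` function are hypothetical. The write is issued to both replicas in parallel, and the call returns only after both have acknowledged. The metadata update to the Witness appliance is omitted for brevity.

```python
from concurrent.futures import ThreadPoolExecutor


class Node:
    """One data node holding a full replica (FTT=1 mirror). Hypothetical model."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.write_buffer: dict = {}

    def commit(self, offset: int, data: bytes) -> str:
        # Land the block in this node's write buffer, then acknowledge.
        self.write_buffer[offset] = data
        return self.name


def mirrored_write(nodes: list, offset: int, data: bytes) -> list:
    """Issue the write to every replica in parallel and return only after
    all of them have acknowledged (synchronous mirroring)."""
    if not nodes:
        raise ValueError("at least one replica is required")
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        # list() waits for every commit to finish before returning.
        acks = list(pool.map(lambda node: node.commit(offset, data), nodes))
    return acks
```

Because the acknowledgement list is only returned once every replica has committed, the VM never sees a write that exists on just one node.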

Default Stretched Cluster / 2 Node Read Behavior

By default, reads are only serviced by the host that the VM is running on.

The image to the right shows a typical read operation. The virtual machine is running on Node 1 and all reads are serviced by the cache device of Node 1’s disk group.

By default, reads do not traverse to the other node. This is the default in 2 Node configurations because they are mechanically similar to Stretched Cluster configurations, where this behavior is preferred when the latency between sites is at the upper end of the supported 5 ms round-trip time (RTT).

This is advantageous when the two sides of a Stretched Cluster are connected by an inter-site link, because it removes the additional overhead of reads traversing that link.

2 Node Reads after vMotion

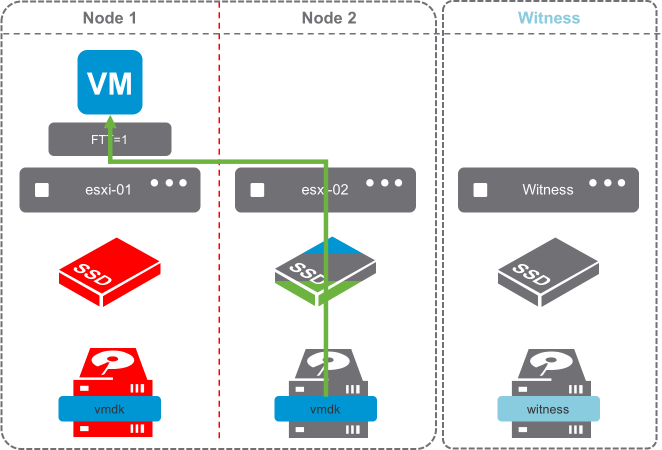

Read operations behave differently after a vMotion.

Because the read cache has not been warmed on the host the virtual machine is now running on, reads have to be serviced by the capacity drives while the cache warms.

The image above shows only part of the cache device as green, indicating that reads are cached in the disk group's read cache as they occur.
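A minimal Python sketch of why reads slow down after a vMotion: a least-recently-used (LRU) read cache that starts cold misses on every block during the first pass over the working set, then hits once it is warm. LRU is an assumed, simplified eviction policy chosen for illustration, not a description of vSAN's actual cache algorithm, and the class and function names are hypothetical.

```python
from collections import OrderedDict


class ReadCache:
    """Minimal LRU read cache that starts empty (cold), like the read cache on
    the destination host right after a vMotion. LRU is an assumed policy."""

    def __init__(self, capacity_blocks: int) -> None:
        if capacity_blocks <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity_blocks
        self.blocks: OrderedDict = OrderedDict()

    def read(self, block: int) -> str:
        """Return where the read was serviced from: "cache" or "capacity"."""
        if block in self.blocks:
            self.blocks.move_to_end(block)  # mark as most recently used
            return "cache"
        # Miss: the read goes to the capacity tier, then populates the cache.
        self.blocks[block] = None
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        return "capacity"


def hit_ratio_per_pass(cache: ReadCache, working_set, passes: int) -> list:
    """Read the whole working set `passes` times; return each pass's hit ratio."""
    blocks = list(working_set)
    if not blocks:
        raise ValueError("working set must not be empty")
    ratios = []
    for _ in range(passes):
        hits = sum(1 for b in blocks if cache.read(b) == "cache")
        ratios.append(hits / len(blocks))
    return ratios
```

With a cache large enough for the working set, `hit_ratio_per_pass(ReadCache(100), range(50), 3)` returns `[0.0, 1.0, 1.0]`: every read goes to the capacity tier on the first pass, and performance recovers once the cache is warm, which matches the temporary drop the user observed.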

A vMotion can be triggered by various DRS events, such as putting a host in maintenance mode or balancing workloads. The default Stretched Cluster recommendation is to keep virtual machines on one site or the other unless there is a failure event.

2 Node Reads after a Disk Failure

Read operations after a disk failure behave similarly to those after a vMotion. In the image on the right, a single disk in Node 1's disk group has failed. Reads now come from Node 2, and the cache device on Node 2 starts caching content from the virtual machine's disk.

Because only a capacity device failed and other capacity devices on Node 1 are still contributing capacity, the affected data is rewritten to one of the surviving capacity devices on Node 1, if there is sufficient capacity. That rebuild copies data from Node 2, so reads also traverse the network.

Once the data has been reprotected on Node 1, the read cache on Node 1 has to warm again.

2 Node Reads after a Cache Device/Disk Group Failure

Read operations after a disk group failure also behave like those after a disk failure.

In configurations where the host with a failed disk group has an additional disk group, rebuilds will occur on the surviving disk group provided there is capacity.

In hosts with a single disk group, rebuilds will not occur, because there is no surviving disk group on that host to rebuild onto.
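The rebuild rules from the last two sections can be sketched as a small placement function. This is a simplified model under stated assumptions: the class and function names are hypothetical, a rebuilt component is placed whole on a single capacity device, and a failed cache device marks its entire disk group unavailable.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CapacityDevice:
    name: str
    failed: bool = False
    free_gb: float = 0.0


@dataclass
class DiskGroup:
    name: str
    # A failed cache device makes the whole disk group unavailable.
    cache_failed: bool = False
    capacity: List[CapacityDevice] = field(default_factory=list)


def rebuild_target(host_disk_groups: List[DiskGroup], required_gb: float) -> Optional[str]:
    """Return "<disk group>/<device>" on the same host that can hold the rebuilt
    component, or None if no surviving device has enough free capacity."""
    for dg in host_disk_groups:
        if dg.cache_failed:
            continue  # the whole disk group is unavailable
        for dev in dg.capacity:
            if not dev.failed and dev.free_gb >= required_gb:
                return f"{dg.name}/{dev.name}"
    return None
```

A failed capacity device is skipped in favor of a healthy device in the same disk group; a failed cache device pushes the rebuild to another disk group; and a host whose only disk group is down returns `None`, meaning no rebuild on that host.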

What can be done to prevent the need for rewarming the read cache?

There is an advanced setting that forces reads to always be serviced by both hosts.

The “/VSAN/DOMOwnerForceWarmCache” setting can be configured to force reads on both Node 1 and Node 2 in a 2 Node configuration.

Forcing reads across Stretched Cluster sites is undesirable, because it introduces additional read latency over the inter-site link.

vSAN 2 Node configurations, however, are typically in a single location, with both nodes connected to the same switch, just like a traditional vSAN deployment, so reading from the other node adds little latency.

When the /VSAN/DOMOwnerForceWarmCache setting is True (1), reads are forced across all mirrors to make the most effective use of cache space. In a Stretched Cluster configuration, this means reads occur across both sites.

When it is False (0), site locality is in effect, and reads occur only on the site where the VM resides.
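The two modes can be sketched as a toy Python model (hypothetical names, not vSAN code). With the setting at 0, every read goes to the replica on the VM's current host; with it at 1, reads are spread across both replicas by address. The 1 MB address-range granularity (`CHUNK_BYTES`) is an assumption chosen for illustration; the point is that a given block is always read from the same replica regardless of where the VM runs, so moving the VM does not leave that block's cache cold.

```python
# Toy model, not vSAN code. FTT=1: one replica per data node.
CHUNK_BYTES = 1024 * 1024  # ASSUMED address-range granularity, for illustration only


def read_source(offset: int, vm_host: str, replica_hosts: list,
                force_warm_cache: int) -> str:
    """Return the host whose replica services a read at `offset`."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    if force_warm_cache == 0:
        # Read locality (default): always read the replica on the VM's own host.
        if vm_host not in replica_hosts:
            raise ValueError("no replica on the VM's current host")
        return vm_host
    # Force warm cache: each address range always maps to the same replica,
    # no matter which host the VM runs on, so reads use both mirrors' caches.
    return replica_hosts[(offset // CHUNK_BYTES) % len(replica_hosts)]
```

After a vMotion from Node 1 to Node 2, `read_source(0, "Node 2", ["Node 1", "Node 2"], 1)` still returns `"Node 1"`, so that block keeps hitting Node 1's warm cache; with the setting at 0, the same read moves to Node 2 and its cold cache.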

In short, DOM Owner Force Warm Cache:

- Doesn’t apply to traditional vSAN clusters

- Stretched Cluster configs with acceptable latency & site locality enabled – Default 0 (False)

- 2 Node (typically very low latency) – Modify 1 (True)
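The summary above amounts to a small lookup table. The Python sketch below encodes it; the configuration-type keys and the function name are hypothetical labels, and `None` stands for "does not apply".

```python
from typing import Optional

# Recommended /VSAN/DOMOwnerForceWarmCache value per configuration type,
# following the summary above. None means the setting does not apply.
RECOMMENDED_FORCE_WARM_CACHE = {
    "traditional": None,  # no sites, so read locality is not a factor
    "stretched": 0,       # keep site read locality (the default)
    "2node": 1,           # nodes usually share a switch; read from both mirrors
}


def recommended_force_warm_cache(config_type: str) -> Optional[int]:
    """Look up the recommended setting for a configuration type."""
    try:
        return RECOMMENDED_FORCE_WARM_CACHE[config_type]
    except KeyError:
        raise ValueError(f"unknown configuration type: {config_type!r}") from None
```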

Not only does this help in the event of a virtual machine moving across hosts, which would require cache to be rewarmed, but it also allows reads to occur across both mirrors, distributing the load more evenly across both hosts.

To check the status of Read Locality, run the following command on each ESXi host:

esxcfg-advcfg -g /VSAN/DOMOwnerForceWarmCache

If the value is 0, then Read Locality is set to the default (enabled).
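If you collect the output of the check command from several hosts, a small parser helps interpret it. This Python sketch assumes that `esxcfg-advcfg -g` prints a line of the form `Value of DOMOwnerForceWarmCache is 0`; confirm the exact format on your ESXi build before relying on it. The function names are hypothetical.

```python
import re
from typing import Tuple

# ASSUMPTION: `esxcfg-advcfg -g /VSAN/DOMOwnerForceWarmCache` prints a line like
# "Value of DOMOwnerForceWarmCache is 0". Verify on your ESXi build.
_VALUE_LINE = re.compile(r"^Value of (\S+) is (-?\d+)$")


def parse_advcfg_get(output: str) -> Tuple[str, int]:
    """Extract (option name, integer value) from esxcfg-advcfg -g output."""
    for line in output.splitlines():
        match = _VALUE_LINE.match(line.strip())
        if match:
            return match.group(1), int(match.group(2))
    raise ValueError("unrecognized esxcfg-advcfg output")


def read_locality_enabled(force_warm_cache: int) -> bool:
    """0 (default) means Read Locality is enabled; 1 means it is disabled."""
    if force_warm_cache not in (0, 1):
        raise ValueError("expected 0 or 1")
    return force_warm_cache == 0
```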

To disable Read Locality in 2 Node Clusters, run the following command on each ESXi host:

esxcfg-advcfg -s 1 /VSAN/DOMOwnerForceWarmCache

Here is a one-liner PowerCLI script to disable Read Locality for both hosts in the 2 Node Cluster. It prompts for the cluster name and must be run from a PowerCLI session already connected to vCenter Server with Connect-VIServer:

Foreach ($VMHost in (Get-Cluster -Name (Read-Host "Cluster Name") | Get-VMHost)) { Get-AdvancedSetting -Entity $VMHost -Name VSAN.DOMOwnerForceWarmCache | Set-AdvancedSetting -Value '1' -Confirm:$false }

Forcing reads to be read across both nodes in cases where the Number of Failures to Tolerate policy is 1 can prevent having to rewarm the disk group cache in cases of vMotions, host maintenance, or device failures.

Client Cache in 6.2

vSAN 6.2 introduced the Client Cache feature for additional read caching at the host, regardless of where the virtual machine’s disks reside.

When reads have to traverse the inter-node link in a 2 Node configuration, the Client Cache provides some read caching relief.

When the virtual machine is vMotioned, the Client Cache has to be rewarmed on the destination host, regardless of the DOM Owner Force Warm Cache setting. This is the same behavior as in a traditional vSAN configuration.

Wrapping It Up

2 Node configurations, while mechanically similar to Stretched Clusters, often are deployed in the same physical location.

Using the /VSAN/DOMOwnerForceWarmCache advanced parameter, 2 Node vSAN configurations can be configured so that read operations behave like those of a traditional vSAN configuration.

Forcing reads across both nodes when the Number of Failures to Tolerate policy is 1 avoids rewarming the disk group cache after vMotions, host maintenance, or device failures. When implemented, this setting changes the default vSphere HA and DRS recommendation of keeping virtual machines running on one site rather than moving them across sites.

Update in the vSAN 6.7 U1 Interface

The updated vSphere Client for vSphere 6.7 U1 now provides the ability to make this setting change in the vSAN Advanced Options interface.

Using the Advanced Options interface, disable Site Read Locality to achieve the same behavior as setting the /VSAN/DOMOwnerForceWarmCache advanced setting to 1.

This was originally published on the VMware Virtual Blocks site: https://blogs.vmware.com/virtualblocks/2016/04/18/2node-read-locality/