As a vSpecialist, I am a member of a group that focuses on Big Data solutions. I primarily focus on Big Data storage, notably Isilon. I was introduced to Isilon early last year and really liked it.

I got my first deep-dive with Isilon before EMC World 2011, when I was given the Isilon vLab and asked to finish it up. I took the content that the Isilon team had provided, made some subtle changes, and polished it up a bit. The one thing that I found was that Isilon was EASY to use. I was fortunate enough to use Isilon more throughout 2011, and I also added Isilon to the VMware Partner Labs at VMworld 2011.

The first time I configured Isilon in the lab for use by vSphere (4.1 then), I didn’t really know what the best practices were. Fortunately, in January (2012), Isilon published a white paper on using Isilon with VMware vSphere 5. That paper can be found here: http://simple.isilon.com/doc-viewer/1739/best-practices-guide-for-vmware-vsphere.pdf. Information on configuring NFS starts at page 15.

This post is a high-level overview of using vSphere 5.0 with NFS storage from an Isilon cluster.

Mounting cluster datastores on a single node

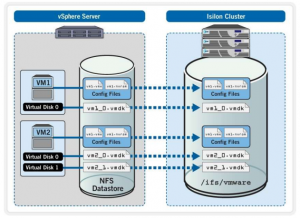

As nodes are added to an Isilon cluster the shared resources can be accessed from any of the nodes. The default root (/ifs) is available to all nodes in the cluster. From a basic perspective, a single node’s IP address could be used to access one or more NFS exports.

If a node is taken offline for maintenance, or fails, datastores mounted through an IP address dedicated to that node become unavailable unless SmartConnect Advanced is being used.

Using SmartConnect Advanced in this manner only accommodates a node going offline; it does not provide any additional throughput. With all NFS datastores mounted on a single node, throughput is limited to what that node can provide.

SmartConnect for accessing NFS shares

Before going into the different methods of connecting to NFS shares for vSphere datastores, it is important to know how SmartConnect works. SmartConnect is the Isilon feature that handles connection availability and load balancing for Isilon clusters.

I used to think that the coolest thing about Isilon was that nodes can be added “on the fly”:

In the video, as nodes are added, the amount of space is automatically expanded, but so is the number of front-end ports.

With a traditional NAS target, the front-end ports do not grow as storage is expanded. A typical NAS device/array has a finite number of ports, which can only be expanded within the capabilities of the NAS controller/head/etc. With Isilon, however, as more nodes are added, more front-end ports are added too. SmartConnect provides the ability to better manage cluster availability and throughput across many nodes/interfaces. Once I got to know more about it, I came to think that SmartConnect is really the coolest part of the mix.

SmartConnect Basic handles Round Robin IP distribution as nodes are added to a cluster, while SmartConnect Advanced handles more advanced IP distribution as nodes are added to the cluster, removed from it, or become unavailable.

In addition to managing IP addresses for a cluster, SmartConnect uses DNS delegation to answer name-resolution requests, so that clients can connect to an FQDN rather than an IP address.

SmartConnect Basic has the following features:

- Static IP allocation to nodes
- Connection Policy Algorithms
  - Round Robin (which node is next)
- No Rebalancing
- No IP Failover

SmartConnect Advanced has the following features:

- Dynamic IP allocation to nodes
- Connection Policy Algorithms
  - Round Robin (which node is next)
  - Connection Count (which is next given connections each node has)
  - Network Throughput (which is next given the amount of throughput each node has)
  - CPU Usage (which is next given the amount of CPU usage each node has)
- Automatic or Manual Rebalancing
- IP Failover Policy
  - Round Robin (which node is next)
  - Connection Count (which is next given connections each node has)
  - Network Throughput (which is next given the amount of throughput each node has)
  - CPU Usage (which is next given the amount of CPU usage each node has)
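To make the four policies a bit more concrete, here is a small Python sketch of how each one might pick the next node. This is only a toy model for illustration: the `Node` and `Balancer` names, the node names, and the load figures are all made up, and it is not how OneFS actually implements SmartConnect.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Node:
    name: str
    connections: int   # current client connections (made-up figure)
    throughput: float  # current network throughput in MB/s (made-up figure)
    cpu: float         # current CPU usage in percent (made-up figure)


class Balancer:
    """Toy model of the four connection policies; not the OneFS implementation."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._turn = count()  # remembers whose turn it is for round robin

    def pick(self, policy):
        if policy == "round_robin":
            # Simply hand out nodes in order, wrapping around at the end.
            return self.nodes[next(self._turn) % len(self.nodes)]
        # The load-based policies all pick the least-loaded node by one metric.
        metric = {
            "connection_count": lambda n: n.connections,
            "network_throughput": lambda n: n.throughput,
            "cpu_usage": lambda n: n.cpu,
        }[policy]
        return min(self.nodes, key=metric)


nodes = [
    Node("node1", connections=12, throughput=80.0, cpu=35.0),
    Node("node2", connections=4, throughput=95.0, cpu=60.0),
    Node("node3", connections=9, throughput=20.0, cpu=15.0),
]
balancer = Balancer(nodes)

for policy in ("connection_count", "network_throughput", "cpu_usage"):
    print(policy, "->", balancer.pick(policy).name)
```

Round Robin ignores load entirely, while the other three policies each look at a single metric, so the "best" node can differ depending on which policy is chosen.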

SmartConnect Advanced adds the ability to easily bring nodes offline for maintenance, as well as to handle node failure, while maintaining the availability of IP-based services such as NFS exports.

Mounting datastores on different nodes

One of the biggest benefits of Isilon is that a single namespace can scale to over 15PB. From a file system and management perspective, that’s pretty awesome, but from a connection perspective, a limited number of connections to a single node could easily be a bottleneck.

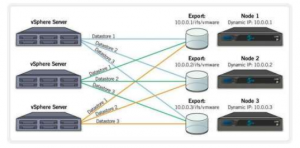

Spreading the connections to each NFS export across nodes will increase the throughput in comparison to all connections on a single node. As can be seen in this graphic, there are 3 hosts connected to 1 Isilon cluster, with 3 datastores (NFS exports), on 3 different IP addresses. Each of the Isilon nodes has a dynamic IP address assigned to it.

This configuration dedicates traffic for each NFS export to a dedicated IP address. This isn’t to be confused with a dedicated node.
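As a rough sketch of this layout, the Python snippet below builds one `esxcli storage nfs add` command per datastore, each pointing at a different IP address. The datastore names, IP addresses, and export paths are hypothetical placeholders, not values from my lab; substitute your own.

```python
# Hypothetical values: datastore name -> (IP address, NFS export path).
# Replace these with the addresses and exports from your own cluster.
DATASTORES = {
    "isilon-ds01": ("192.168.10.11", "/ifs/vmware/ds01"),
    "isilon-ds02": ("192.168.10.12", "/ifs/vmware/ds02"),
    "isilon-ds03": ("192.168.10.13", "/ifs/vmware/ds03"),
}


def mount_commands(datastores):
    """Build one esxcli mount command per datastore, each on its own IP."""
    return [
        f"esxcli storage nfs add --host={ip} --share={export} --volume-name={name}"
        for name, (ip, export) in datastores.items()
    ]


for command in mount_commands(DATASTORES):
    print(command)
```

The same commands would be run on every ESXi host, so that each datastore is mounted with the same address and path everywhere.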

With SmartConnect Advanced, in the event of a node going offline for maintenance or because of a failure, one of the remaining nodes will service requests for the IP address that failed over.

SmartConnect Advanced can move the failed IP address to another node based on any of the policies listed above. This keeps the load distributed as evenly as possible across the nodes that remain available.

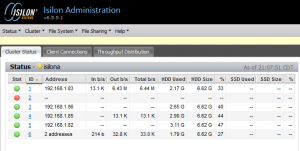

Update: Here are a few screen shots from my Isilon lab…

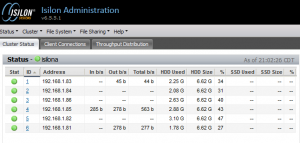

6 Node Isilon Cluster

Failed node with only SmartConnect Basic: notice Node 2’s IP address doesn’t fail over

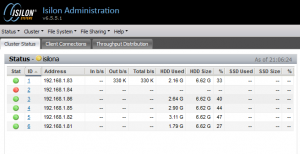

Failed node with SmartConnect Advanced: notice Node 6 takes 2 addresses

Notice that with SmartConnect Advanced the failed IP address moves to another node. In this case, node 6 is now answering for 2 different IP addresses.
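The difference between the two behaviors can be sketched in a few lines of Python. This is a simplified simulation under stated assumptions, not OneFS code: the `fail_node` function, the IP addresses, and the connection counts are invented for illustration, and the failover here uses a Connection Count style choice.

```python
def fail_node(assignments, failed, connections, advanced):
    """Return (node -> IPs mapping, unreachable IPs) after `failed` goes offline.

    assignments: dict of node name -> list of IPs it currently answers for
    connections: dict of node name -> current connection count (made-up figures)
    advanced:    True to model SmartConnect Advanced, False for Basic
    """
    remaining = {n: list(ips) for n, ips in assignments.items() if n != failed}
    orphaned = list(assignments[failed])
    if not advanced:
        # Basic: static allocation, no IP failover, so the IPs are unreachable.
        return remaining, orphaned
    load = {n: connections[n] for n in remaining}
    for ip in orphaned:
        target = min(load, key=load.get)  # least-connected surviving node
        remaining[target].append(ip)
        # Simplification: count the moved IP as one extra connection so that
        # several orphaned IPs do not all land on the same node.
        load[target] += 1
    return remaining, []


# Hypothetical 6-node pool with one IP per node.
pool = {f"node{i}": [f"10.0.0.{i}"] for i in range(1, 7)}
conns = {"node1": 10, "node2": 5, "node3": 8, "node4": 9, "node5": 7, "node6": 3}

basic, lost = fail_node(pool, "node2", conns, advanced=False)
print("Basic, unreachable IPs:", lost)

adv, lost = fail_node(pool, "node2", conns, advanced=True)
print("Advanced, node6 now answers for:", adv["node6"])
```

With these made-up connection counts, node 6 is the least-loaded survivor, so it ends up answering for two addresses, much like in the screen shot above.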

More information

The white paper (http://simple.isilon.com/doc-viewer/1739/best-practices-guide-for-vmware-vsphere.pdf) has this information and more about the best practices when using Isilon (NFS/iSCSI) and VMware vSphere 5.0.

Good article, Jase. Explains a lot about how this works, and seems to temper many of the shortcomings of NFS v3 on vSphere.

Hi Jase.

Just wanted to let you know that an updated version of the Isilon-vSphere 5 best practices guide was published in April. It adds more links to useful documentation and corrects a few errors that I found after the initial publication. It can be downloaded from the Isilon online library here:

http://simple.isilon.com/doc-viewer/1517/best-practices-guide-for-vmware-vsphere.pdf

James Walkenhorst

Solutions Architect, Virtualization

EMC – Isilon Storage Division

James,

I’ve updated the links.

Great stuff!

Thanks,

Jase

Jase, Outstanding write up, enjoyed the informative, yet lite approach you took to explaining the technology!

Hi Friends ,

I have a question: does Isilon support creating LUNs, like DMX and VMAX, and assigning those LUNs to different hosts? Please clarify at the earliest.

Thanks

Nag

Isilon will allow you to create iSCSI LUNs, but not Fibre Channel based LUNs. I’ll see if I can post an article on how to configure iSCSI on Isilon.

FYI, the link for the whitepaper referenced herein no longer works:

http://simple.isilon.com/doc-viewer/1517/best-practices-guide-for-vmware-vsphere.pdf

One that works is:

http://simple.isilon.com/doc-viewer/1739/best-practices-guide-for-vmware-vsphere.pdf

btw, the link in the EMC Documents Library also doesn’t work. It points to the obsolete version 1517, which doesn’t exist. What gave me a clue to find the current correct version was a comment that said “INTERNAL NOTE: out of date duplicate, redirect to 1739”, so I changed the URL to use that number and then it worked!

Thanks for the update!

Hi there, I really liked reading your article. It’s very informative. I had a question for you regarding latency. We have a 7-node X200 cluster hooked up to 4 Cisco UCS blades and are having some serious issues with latency. At the moment we have 3 NFS datastores on 3 different nodes on the Isilon. These are added to vSphere 5 using individual IP addresses and not SmartConnect. The main use of our Isilon cluster is SMB, which is working perfectly. But our latency number for NFS traffic on vSphere is astronomical, upwards of 300ms. Isilon support is focused on troubleshooting at the cluster alone. I was wondering if you ever came across something like this or have any suggestions. Thanks in advance!

I have heard of some incidents with latency, but don’t have any specifics around them.

I’d highly recommend SmartConnect Advanced if you are using vSphere 5, as it can give you some flexibility on the loading.

Keep in mind that there are some things that you need to ensure… The datastore/NFS mounts/and VMs need to be set to Random (IIRC), as well as a couple other settings, based on the best practices guide. I don’t have a cluster in front of me, so I don’t want to give you the wrong recommendations.

I’d still go with Isilon support’s recommendations, and yes, troubleshooting at the cluster level would be the first place to start.

Also, what version of OneFS are you running? 6.5? 6.0? OneFS 7.0 was just recently released, and provides some better NFS performance.

Cheers,

Jase

Thanks Jase. We have looked at every hop between the VMs and the Isilon: access layer switch, core, UCS… Can’t think of anything else. We are coming to the conclusion that the application we are trying to run needs something faster than the Isilon.

Depending on the type of workload, Isilon may or may not be the best fit.

Highly transactional workloads typically aren’t the best fit.

The latest recommendations I have seen are for Tier 3/Tier 4 VM workloads.

Also, what build are you guys running? OneFS 6.5.5.x?

You might want to look at OneFS 7.0 as it is a good bit faster, especially in VMware environments.

How will a major update on the cluster, one that requires the cluster to be rebooted, affect the VM farm? Will you have to bring down all of your guests? We have over 1K guests, and bringing them all down for any reason is a problem.

Thanks,

K2

K2,

For an upgrade that requires a cluster reboot, the VMs would have to be powered off, or moved to arrays/NAS that remain online. This is the same whether Isilon is the backend, or another array/NAS from any vendor.

Minor upgrades that do not require a cluster reboot, but only individual node reboots, do not require this.